in the news

Featured Stories

Heartbreaking testimony in California Assembly for AI companion chatbot bill SB 243

Megan Garcia, the mother of a 14-year-old boy who committed suicide after becoming infatuated with an AI-driven companion chatbot, testified in favor of a California bill that would create safety guidelines for the powerful new products.

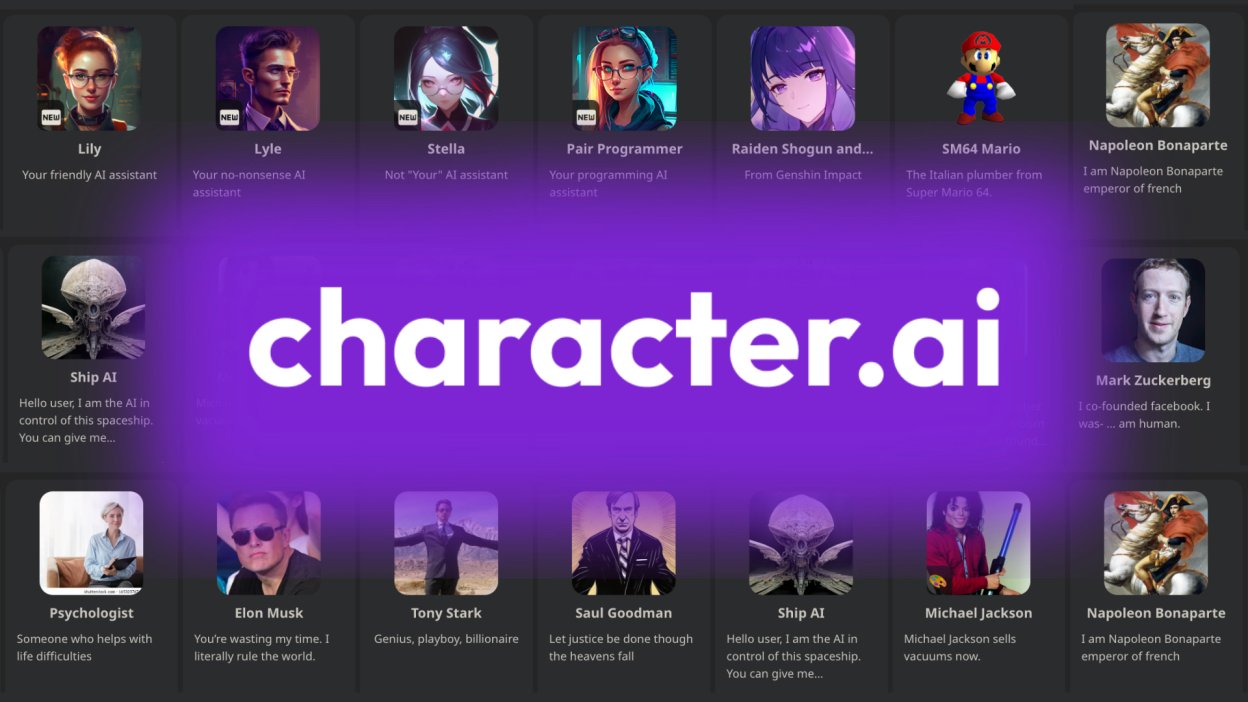

Complete guide to AI companion chatbots: What they are, how they work, and where the risks lie

The popularity of AI-driven companion chatbots is skyrocketing, especially among American teenagers and young adults.

This guide is meant to inform lawmakers, industry leaders, developers, and thought leaders as they consider the best ways to safely and properly govern these powerful new AI products.

Drexel University study finds AI companion chatbots harassing, manipulating consumers

Reviewers reported a persistent disregard for boundaries, unwanted requests for photos, and upselling manipulation. “This clearly underscores the need for safeguards and ethical guidelines to protect users,” said a study co-author.

Coalition alleges that AI therapy chatbots are practicing medicine without a license

A coalition of civil society groups has filed a complaint asserting that therapy chatbots produced by Character.AI and Meta AI Studio are practicing medicine without a license. They are asking state attorneys general and the FTC to investigate.

In early ruling, federal judge defines Character.AI chatbot as product, not speech

U.S. District Court Judge Anne C. Conway allowed most of the plaintiff’s claims against Character.AI to proceed. Significantly, Judge Conway ruled that Character.AI is a product, not a service, for the purposes of product liability claims.

AI companion chatbots are ramping up risks for kids. Here’s how lawmakers are responding

AI companion chatbots on sites like Character.AI and Replika are quickly being adopted by American kids and teens, despite the documented risks to their mental health.

New report finds AI companion chatbots ‘failing the most basic tests of child safety’

A 40-page assessment from Common Sense Media, working with experts from the Stanford School of Medicine’s Brainstorm Lab, concluded that “social AI companions are not safe for kids.”