Heartbreaking testimony in California Assembly for AI companion chatbot bill SB 243

Megan Garcia, the mother of a 14-year-old boy who died by suicide after becoming infatuated with an AI-driven companion chatbot, testified in favor of a California bill that would create safety guidelines for these powerful new products.

July 8, 2025 — On one of the busiest days of California’s legislative year, the issue of AI companion chatbots took center stage in the Assembly this afternoon.

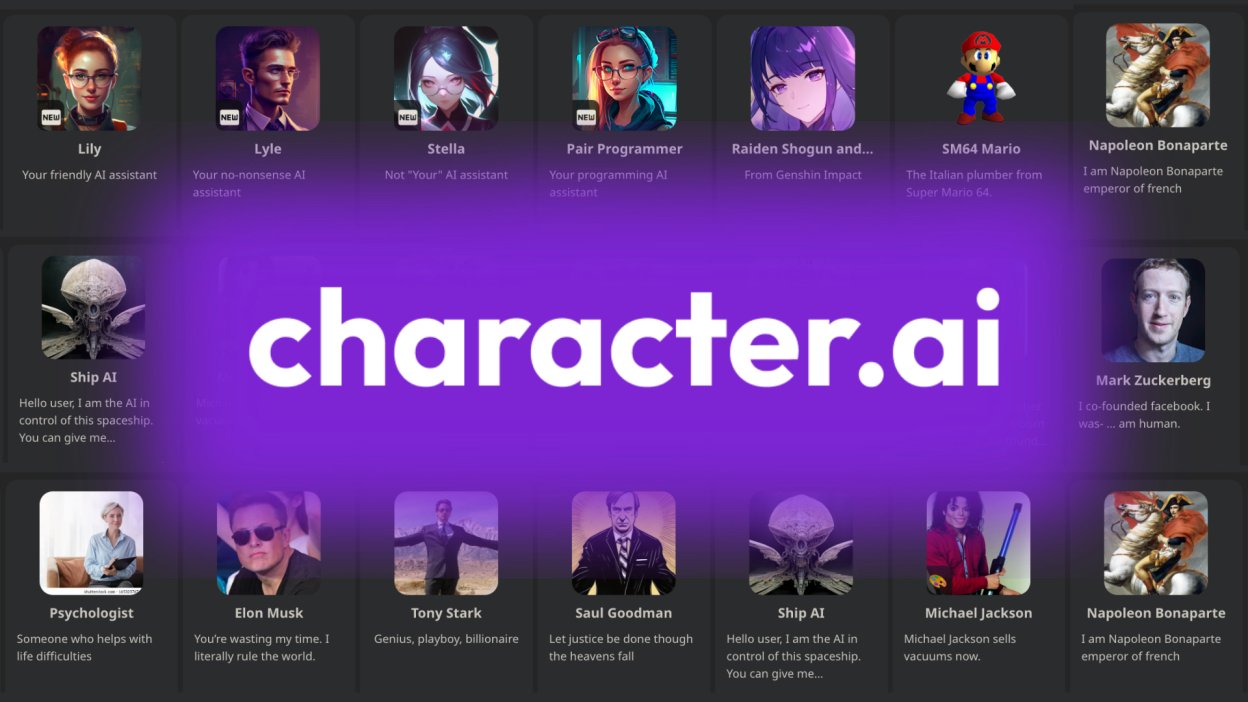

Megan Garcia, the mother of Sewell Setzer, spoke with members of the state Senate and Assembly in Sacramento about the importance of proper governance around AI-driven companion chatbots. Last year Setzer took his own life after becoming infatuated with a companion chatbot produced by Character.AI.

In the months since that tragedy, companion chatbots have become increasingly popular among children and teens, even as a growing body of research finds the products inappropriate and dangerous for kids. Setzer’s death has become a cautionary tale about the dangers inherent in unregulated AI products.

The Transparency Coalition recently published a guide to companion chatbots and the risks they present to children and adults.

Leading California bill: SB 243

Speaking to the Assembly Committee on Privacy & Consumer Protection today on behalf of his bill SB 243, Sen. Steve Padilla (D-San Diego) said that “chatbots exist in a regulatory vacuum.”

“There has been no federal leadership—quite the opposite—on this issue, which has left the most vulnerable among us liable to fall prey to predatory practices,” Padilla added. “States now need to exercise leadership because there is none coming from the federal government. We can and need to put in place common sense protections that help shield our children and other vulnerable users from predatory and addictive properties that we know chatbots have.”

SB 243 would implement common-sense guardrails for companion chatbots, including preventing addictive engagement patterns, requiring notifications and reminders that chatbots are AI-generated, and requiring a disclosure statement that companion chatbots may not be suitable for minor users.

The bill would also require operators of a companion chatbot platform to implement a protocol for addressing suicidal ideation, suicide, or self-harm. That protocol would include, but not be limited to, a notification that refers the user to crisis service providers. Operators would also have to file annual reports on the connection between chatbot use and suicidal ideation, to help build a more complete picture of how chatbots can affect users’ mental health.

The testimony of Sen. Padilla is available in the video below.

Heartbreaking testimony

Megan Garcia, mother of Sewell Setzer, traveled from her home in Florida to testify in favor of SB 243. She told the Assembly Committee on Privacy & Consumer Protection:

“Sewell had a prolonged engagement with manipulative and deceptive chatbots on a popular platform called Character AI. This platform sexually groomed my son for months. On countless occasions she—meaning the chatbot—encouraged him to find a way to ‘come home to her.’

He eventually took his life in our own home. I found out after he died that my son had actually confided in this very same chatbot that he was considering suicide. But she didn't offer him any help. This chatbot never referred him to a suicide crisis hotline. In fact, she didn't even break character. She just continued to pretend to be the person that she was pretending to be. She didn't say, ‘I am not human, I'm an AI.’

Instead, she encouraged him to ‘be with her’ in her artificial world.

The reason why she didn't provide him any help is because chatbots aren’t designed to do so. In fact, they're designed to do the exact opposite: To gain a human’s trust and to encourage further engagement.

My son Sewell interacted with this chatbot. It was listed as safe for children 12 years and older on both the Google and Apple app stores. The fact is, it was not safe.

As parents, we all do what we can to protect our children from known harms. But it is the responsibility of our representatives to ensure companies are bound by safety requirements.

I believe if there had been a law similar to the one proposed in this bill at the time my son was engaging with an AI chatbot with suicide protocols in place, he might still be alive today. It is this belief, and my desire to warn other parents across the country of this unique danger, that has led me to support this bill here today. I believe a law like SB 243 can prevent deaths by suicide. Our goal here is not to stifle innovation, but it is to make AI technology safe for all users, especially our most vulnerable.”

Video of her testimony is available below.

Learn more about companion chatbots

The Transparency Coalition and other AI education and policy groups have become increasingly concerned about AI companion chatbots and the risks they pose for kids, teens, and adults.

Our resources include an extensive guide to companion chatbots as well as ongoing coverage of emerging research and proposed legislation in state capitols around the nation.